If you ask a developer for application performance management tips for Node.js apps, there's a nearly 100 percent chance that you'll be told to avoid synchronous work within your app. The reason why is simple enough: Node.js applications run their JavaScript on a single main thread, and if you keep that thread busy with synchronous work, your app won't have capacity to handle incoming requests efficiently.

In a perfect world, all Node.js apps would be written to avoid synchronous work from the get-go. In the real world, though, that's obviously not the case. There are plenty of Node.js apps that are not optimized for performance because they contain synchronous code that takes longer than it ideally would to execute. This results in what's called a "blocked" thread, which occurs when one client's request ties up the thread, depriving other clients of access to it.

The problem from a Node.js application monitoring perspective is that – unless you have access to the source code of your Node.js app and are willing to spend hours poring over it to figure out how the app handles synchronous requests – it's very hard using traditional monitoring tools to know when your Node app is experiencing blocked thread issues.

Fortunately, there's a way to square this circle. It involves monitoring what's known as the event loop, which provides a level of visibility that you don't get from monitoring Node apps in a generic way. By focusing on the event loop, it becomes possible to identify situations where thread blocking issues are the weakest link in a Node app's performance – something that you can't do using APM tools that monitor request latency in a generic way.

To explain why event loop monitoring is the key to effective Node monitoring, let’s walk through the nitty-gritty details of how event loops work in Node apps, why conventional APM tools overlook the event loop and how to gain insight into the event loop in order to supercharge Node performance and application availability.

What is an event loop?

In an application, an event loop is essentially an orchestrator that is responsible for executing code in response to events.

The event loop is central to Node apps because Node.js uses an event-driven architecture. The event loop keeps track of events (like requests from clients), then runs the callbacks registered for them, handing certain expensive tasks (such as file system work) to a worker pool. It handles both synchronous and asynchronous events – meaning that it's responsible for figuring out how to divvy up resources between events that are occurring in real time and those that were initiated in the past but have not yet been fulfilled.

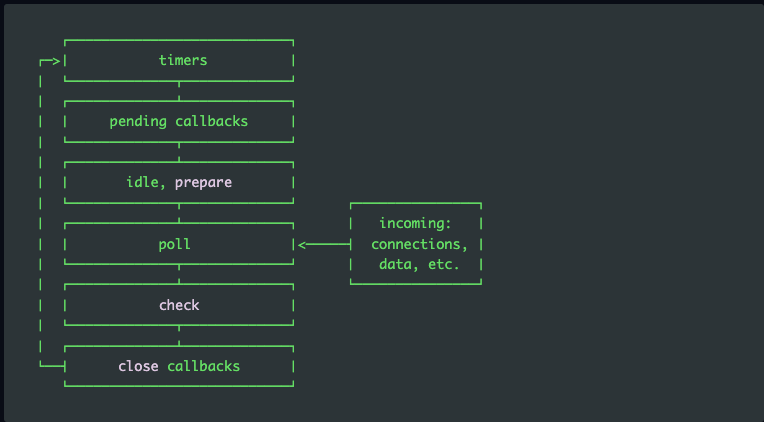

To help perform non-blocking I/O operations, the Node.js event loop can offload operations to the system kernel in many cases. That makes it possible to handle events in the background using a process that looks like this:

As you can see, there are several discrete steps in this process:

- Timers: Timers execute callbacks scheduled by setTimeout() and setInterval().

- Pending callbacks: Executes I/O callbacks that were deferred to the next loop iteration.

- Idle, prepare: These are used internally.

- Poll: Responsible for identifying new I/O events and executing I/O-related callbacks.

- Check: Runs callbacks scheduled with setImmediate(), which execute right after the poll phase of the current loop iteration completes.

- Close callbacks: Executes callbacks of close events.

We're detailing the components of Node event loops not just so you understand how the event loop works in Node apps, but also because these components are all linked to app metrics that we can monitor – if we're able to monitor the event loop.
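The ordering of the phases listed above can be observed directly. The following is a minimal sketch, not a definitive test: `observePhaseOrder` is a hypothetical helper, and the `fs.stat()` call on the current directory is only a convenient way to get a callback that runs in the poll phase. From inside that callback, a `setImmediate()` callback (check phase) runs before a zero-delay `setTimeout()` callback (timers phase of the next iteration).

```javascript
const fs = require('node:fs');

// Hypothetical helper: records the order in which a timer callback and a
// setImmediate() callback run when both are scheduled from an I/O callback.
function observePhaseOrder() {
  return new Promise((resolve) => {
    const order = [];
    // The result of stat() is irrelevant here; only the fact that its
    // callback runs during the poll phase matters.
    fs.stat(process.cwd(), () => {
      setTimeout(() => {
        order.push('timers: setTimeout');
        resolve(order); // the timer runs last, so resolve here
      }, 0);
      setImmediate(() => order.push('check: setImmediate'));
    });
  });
}

observePhaseOrder().then((order) => console.log(order.join(' -> ')));
// prints: check: setImmediate -> timers: setTimeout
```

The check phase follows the poll phase within the same loop iteration, while the timer has to wait for the timers phase of the next iteration.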

The scourge of event loop lag

We'll get into how to monitor event loops in a few moments. First, though, allow us to elaborate a bit more on why monitoring Node event loops is so important.

The reason – as we alluded to in the introduction – is that Node's single-threaded architecture means that synchronous code can dramatically degrade Node application performance metrics. And in most cases, you have no way of knowing whether synchronous code exists within a Node app that you're tasked with monitoring. Conventional Node monitoring tools might tell you that one of the API endpoints of your app is not responding to requests quickly enough in some cases, but they cannot tell you whether the root cause is synchronous work and inefficient event loop usage in another API implementation, or simply an inefficient or buggy implementation of the endpoint in question.

But if you can measure how long it takes the event loop to execute a callback once the callback has been scheduled, then it becomes possible to know whether event loop issues are the root cause of poor Node performance.

That measurement provides insight into what's known as event loop lag. Event loop lag tells you how long it takes to execute a function after it has been scheduled to run. If synchronous code is slowing down execution, you'll be able to identify it by tracking event loop lag.
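As a rough illustration of that definition, the sketch below schedules a timer, deliberately blocks the thread, and reports how late the callback ran. The helper names `blockFor` and `measureLagOnce` are hypothetical, the 10 ms and 200 ms figures are arbitrary, and timer drift is only one simple way to approximate lag.

```javascript
// Hypothetical helper: simulates synchronous work by busy-waiting for `ms` milliseconds.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Nothing else can run on the main thread while this loop spins.
  }
}

// Schedules a callback `delayMs` from now and resolves with how many
// milliseconds late it ran. That lateness approximates event loop lag.
function measureLagOnce(delayMs = 10) {
  return new Promise((resolve) => {
    const expectedAt = Date.now() + delayMs;
    setTimeout(() => resolve(Math.max(0, Date.now() - expectedAt)), delayMs);
  });
}

const pending = measureLagOnce(10); // the callback is due in 10 ms
blockFor(200);                      // but synchronous code holds the thread for 200 ms
pending.then((lagMs) => console.log(`event loop lag: ~${lagMs} ms`));
```

Because the timer was due after 10 ms but the thread stayed busy for 200 ms, the reported lag should come out at roughly 190 ms. Without the `blockFor` call it should stay close to zero.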

By monitoring Node event loop lag on a continuous basis, and configuring alerts to fire when the lag passes a certain threshold (say, 100 milliseconds), you can quickly identify Node performance issues that are linked to synchronous code. In turn, you can update your application or API code to improve Node performance metrics.

That sure beats wasting time trying to get to the root cause of Node performance problems through trial and error or guessing, which is what you'd be left doing if your application performance metrics tools aren't capable of catching event loop lag.

Monitoring Node.js event loop lag

How can you actually monitor event loop lag in Node.js apps? Here's sample code that lets you do it:
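What follows is a minimal sketch, assuming Node's built-in `perf_hooks` module and its `monitorEventLoopDelay()` histogram. The `startLagMonitor` function, its option names, the 5-second reporting window, and the `onLag` callback are illustrative assumptions rather than a prescribed setup. The 100 ms threshold mirrors the example figure mentioned earlier.

```javascript
const { monitorEventLoopDelay } = require('node:perf_hooks');

// Hypothetical monitor: samples event loop delay continuously and calls
// `onLag` whenever the worst delay in a reporting window exceeds `thresholdMs`.
function startLagMonitor({
  thresholdMs = 100,
  reportEveryMs = 5000,
  resolutionMs = 20,
  onLag = console.warn,
} = {}) {
  const histogram = monitorEventLoopDelay({ resolution: resolutionMs });
  histogram.enable();

  const timer = setInterval(() => {
    // Histogram values are in nanoseconds. In practice the samples include
    // the sampling interval itself, so it is subtracted here as an approximation.
    const maxMs = Math.max(0, histogram.max / 1e6 - resolutionMs);
    const p99Ms = Math.max(0, histogram.percentile(99) / 1e6 - resolutionMs);
    if (maxMs > thresholdMs) {
      onLag(`event loop lag: max ${maxMs.toFixed(1)} ms, p99 ${p99Ms.toFixed(1)} ms`);
    }
    histogram.reset(); // start a fresh window for the next report
  }, reportEveryMs);
  timer.unref(); // monitoring alone should not keep the process alive

  // Returns a function that stops sampling and reporting.
  return function stop() {
    clearInterval(timer);
    histogram.disable();
  };
}

const stopMonitor = startLagMonitor({ thresholdMs: 100 });
```

In a real service, the `onLag` callback would typically forward the measurement to whatever alerting or metrics pipeline is already in place instead of logging a warning. Calling `stopMonitor()` shuts the sampler down.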
