groundcover LLM Observability

Zero-instrumentation observability for modern LLM and agent-based workflows

Monitor, analyze, and secure your LLM-powered applications — including agents, tool calls, and prompt chains — with full-stack visibility and zero instrumentation. groundcover uses eBPF to deliver real-time insights into reasoning paths, token usage, and response quality without touching your code.

Why LLM observability matters

The age of single-turn LLMs is over. Today’s AI systems rely on multi-turn agents, retrieval-augmented pipelines, and tool-augmented workflows that make debugging and optimization much harder. Monitoring token usage and latency is no longer enough. You need to understand why an LLM response fails, how context drifts across turns, or when an agent misuses a tool — all without adding brittle SDKs to your stack.

groundcover is the only solution to deliver true LLM and agent observability out of the box — no instrumentation, no request limits, no sensitive data leaving your cloud.

Secure, end-to-end visibility for LLM and agentic applications

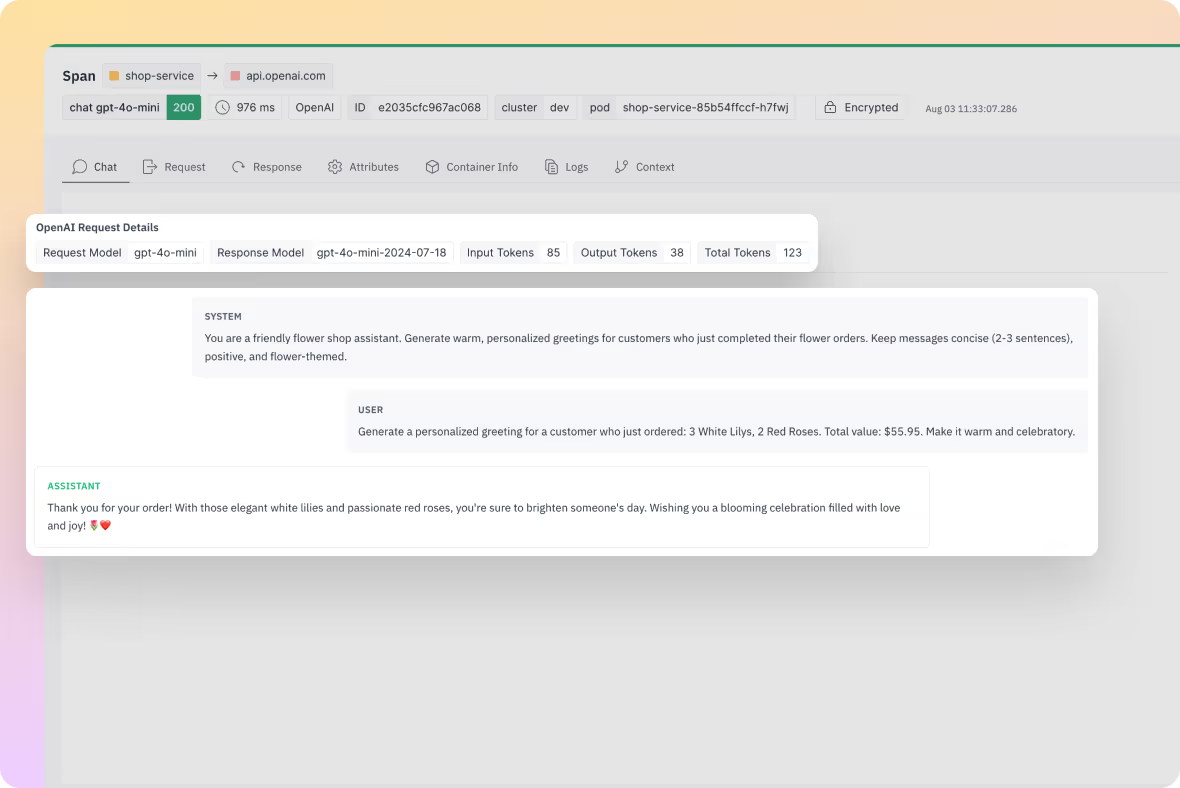

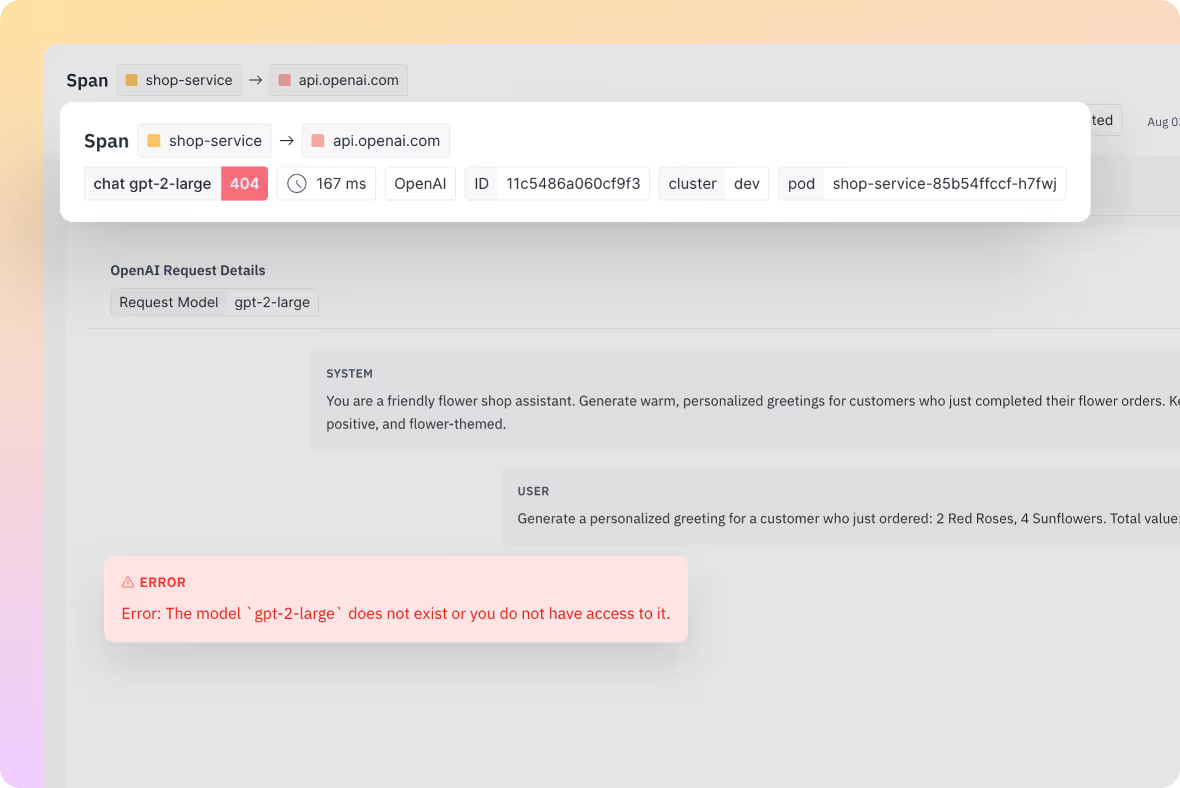

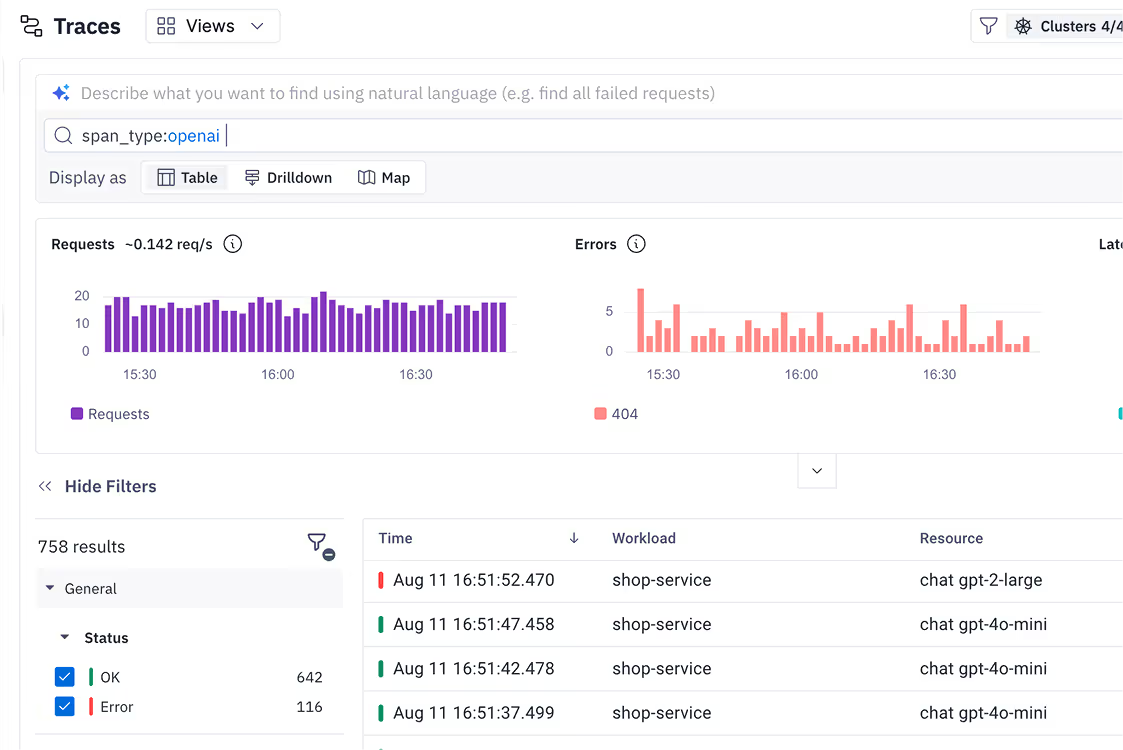

groundcover monitors every interaction with your LLMs, capturing full payloads, token usage, latency, throughput, and error patterns. But it doesn't stop at metrics. It captures behavior, allowing you to follow the reasoning path of a failed output, investigate prompt drift across a session, or isolate where a tool call introduced latency or degraded the response.

groundcover is the only eBPF-based platform to achieve full visibility into API requests and responses. This lets observability and security practitioners monitor the contents of LLM calls and identify anomalies and sensitive data sent to third-party LLM providers.
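To illustrate what "identifying sensitive data sent to third-party LLM providers" can mean once request payloads are visible, here is a minimal sketch. It assumes a hypothetical `CapturedCall` record holding the destination host and prompt text (this is not a groundcover schema or API), treats hosts ending in hypothetical internal suffixes as in-cluster, and uses two deliberately simple regex patterns as stand-ins for real PII detection.

```python
import re
from dataclasses import dataclass

# Illustrative PII patterns only; production-grade detection needs much more than regexes.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical host suffixes treated as internal (not third-party) destinations.
INTERNAL_SUFFIXES = (".svc.cluster.local", ".internal")


@dataclass
class CapturedCall:
    """Hypothetical shape of one captured LLM request (not a groundcover schema)."""
    host: str
    prompt: str


def is_third_party(host: str) -> bool:
    """A host is third-party if it does not end with any internal suffix."""
    return not host.endswith(INTERNAL_SUFFIXES)


def find_pii(text: str) -> list[str]:
    """Return the names of the PII patterns that match somewhere in the text."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]


def flag_sensitive_calls(calls: list[CapturedCall]) -> list[tuple[str, list[str]]]:
    """Flag calls that send PII-looking content to a third-party host."""
    flagged = []
    for call in calls:
        hits = find_pii(call.prompt)
        if hits and is_third_party(call.host):
            flagged.append((call.host, hits))
    return flagged


calls = [
    CapturedCall("api.llm-vendor.example.com", "Summarize the ticket from jane@example.com"),
    CapturedCall("llm.svc.cluster.local", "SSN 123-45-6789 for internal review"),
    CapturedCall("api.llm-vendor.example.com", "Translate this paragraph to French"),
]
print(flag_sensitive_calls(calls))  # [('api.llm-vendor.example.com', ['email'])]
```

The SSN in the second call is not flagged because it goes to an in-cluster host; only PII leaving for an external provider is reported.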

Designed for real-world LLM workflows

Protect LLM pipelines from data exposure and security risks

All data captured by groundcover stays within your environment. There's no third-party storage, no outbound traffic, and no risk of exposing sensitive prompts, completions, or user inputs that contain PII. You maintain full control over data residency and compliance, with no trade-off on visibility.

This model is ideal for teams working with regulated data, internal copilots, or user-generated prompts, where security, privacy, and compliance are non-negotiable.

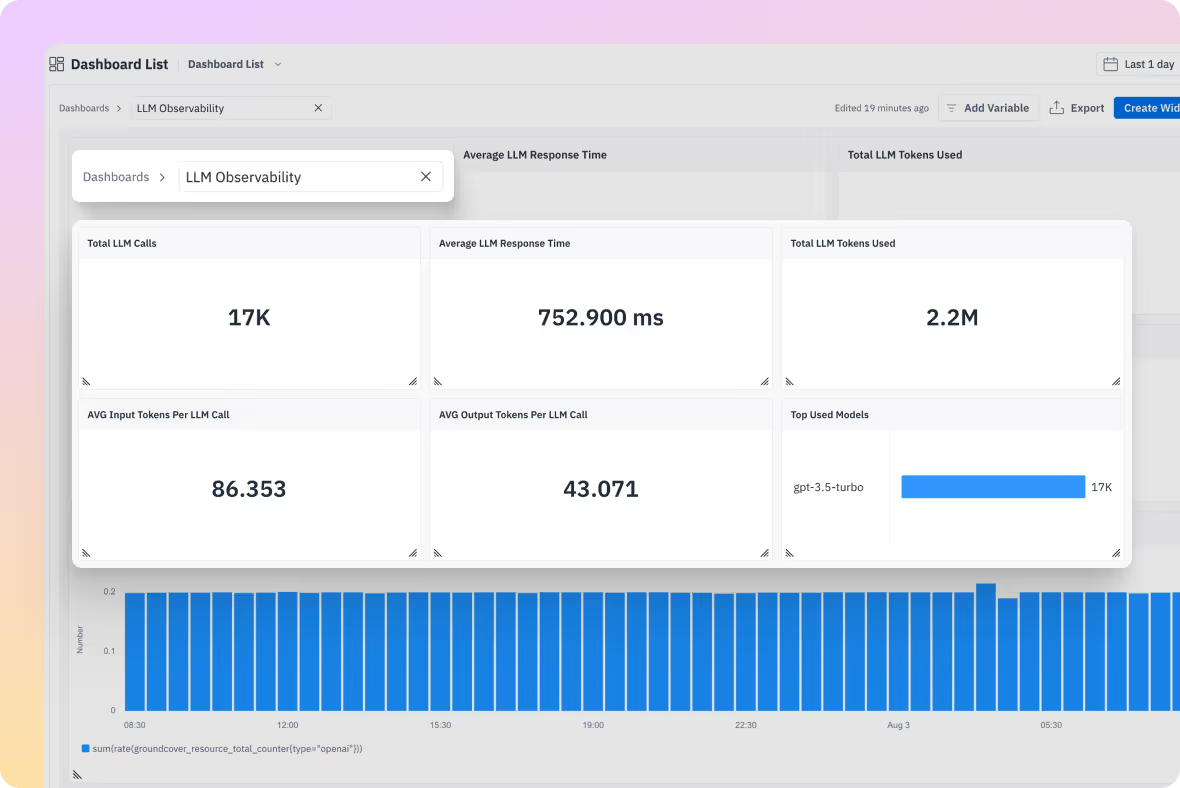

Improve performance and manage LLM spend

LLM workloads can be unpredictable and expensive. groundcover gives you instant access to token usage, latency, throughput, and error rates across every LLM interaction. Without writing a single line of code, you can pinpoint your most token-heavy use cases, track inefficient flows, and uncover hidden opportunities to reduce cost and improve response times. All insights are delivered out of the box, so you can move fast and optimize confidently.
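As a rough illustration of how per-call token and latency data can surface the most token-heavy use cases, the sketch below aggregates hypothetical `LLMCall` records (not a groundcover export format) by use case. The per-1K-token prices are placeholder assumptions; substitute your provider's actual rates.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder per-1K-token prices in USD (assumptions, not real provider rates).
PRICE_PER_1K_INPUT = 0.5
PRICE_PER_1K_OUTPUT = 1.5


@dataclass
class LLMCall:
    """Hypothetical per-call record (not a groundcover export format)."""
    use_case: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def summarize(calls: list[LLMCall]) -> list[tuple[str, int, float, float]]:
    """Return (use_case, total_tokens, estimated_cost_usd, mean_latency_ms), heaviest first."""
    totals = defaultdict(lambda: {"calls": 0, "tokens": 0, "cost": 0.0, "latency_ms": 0.0})
    for c in calls:
        t = totals[c.use_case]
        t["calls"] += 1
        t["tokens"] += c.input_tokens + c.output_tokens
        t["cost"] += (c.input_tokens * PRICE_PER_1K_INPUT
                      + c.output_tokens * PRICE_PER_1K_OUTPUT) / 1000
        t["latency_ms"] += c.latency_ms
    rows = [
        (use_case, t["tokens"], round(t["cost"], 4), t["latency_ms"] / t["calls"])
        for use_case, t in totals.items()
    ]
    # Sort so the most token-heavy use cases come first.
    return sorted(rows, key=lambda r: r[1], reverse=True)


calls = [
    LLMCall("support-agent", 1200, 400, 900.0),
    LLMCall("support-agent", 800, 300, 700.0),
    LLMCall("autocomplete", 100, 20, 120.0),
]
for row in summarize(calls):
    print(row)
```

Ranking by total tokens rather than call count keeps a rarely used but verbose flow from hiding behind a chatty, cheap one.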

Monitor LLM responses with full execution context

LLM responses rarely fail in isolation. groundcover captures the complete execution context behind every request, including prompts, response payloads, tool usage, and session history, so you can trace quality issues back to their source. Whether you're debugging hallucinations, drift, or inconsistent output, you'll see not just what went wrong, but how and why it happened. This context is key to maintaining output quality in real-world production environments.

How groundcover does it differently

See groundcover LLM observability in action

Deploy groundcover in your environment in minutes, or explore it with our data before lighting up your own cluster.
