Prometheus Scraping Explained: Efficient Data Collection in 2025


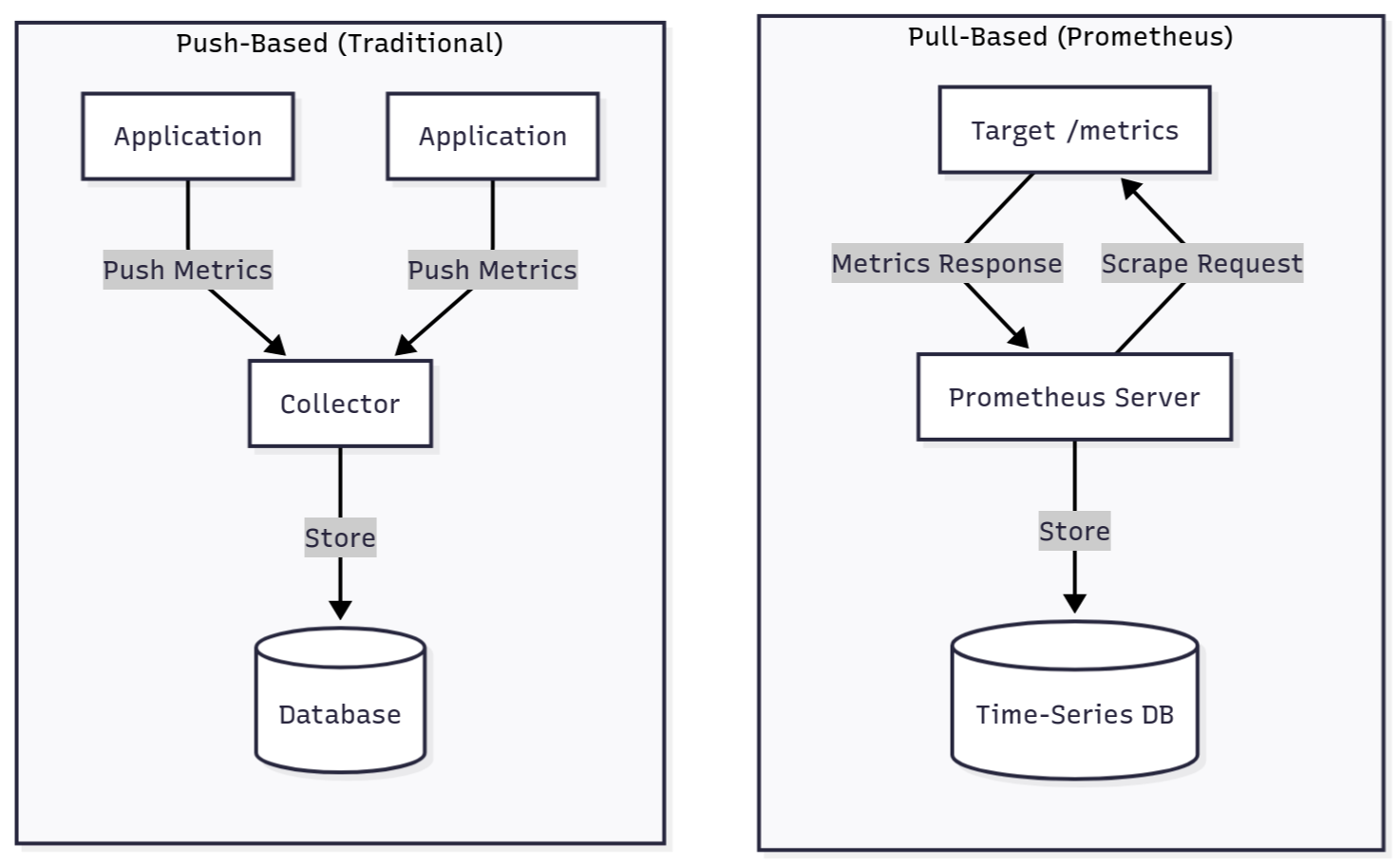

Here's what makes Prometheus different. It pulls metrics instead of waiting for applications to send them (the “push” model). Your Prometheus server actively fetches data from targets, rather than sitting around hoping applications remember to send it. Each target exposes metrics at an HTTP endpoint, conventionally /metrics, which returns plain text that Prometheus can read and store.

This inversion (server pulls, apps expose) makes monitoring more reliable. Prometheus controls when and how often collection happens, so detecting dead targets is straightforward. Applications don't need to know where to send metrics or maintain persistent connections. When a target breaks, Prometheus notices on the next scrape. You can curl any /metrics endpoint yourself to verify data independently.

As cloud-native architectures grow more complex in 2025, understanding how to configure, optimize, and troubleshoot Prometheus scraping is essential for maintaining production visibility. This guide covers scraping mechanics, configuration, and advanced techniques for building robust monitoring infrastructure.

What is Prometheus Scraping?

Prometheus scraping is pull-based. That means your Prometheus server doesn't wait for metrics to arrive. Instead, it goes and gets them. Every X seconds (you decide), Prometheus sends an HTTP GET request to /metrics on each target you've configured.

Unlike push-based systems, where applications send metrics to collectors, Prometheus inverts this relationship. The server initiates every scrape request. This gives you complete control over collection frequency and makes health detection straightforward.

Pull-based collection also simplifies troubleshooting. When something breaks, you can curl the /metrics endpoint yourself before you even open the Prometheus logs. If the endpoint returns data when you query it manually, you know the target itself works and the problem is elsewhere. There is no guessing about whether metrics are being sent, received, or dropped somewhere in between.

How Prometheus Scraping Works

That's the concept. Now let's look at how it actually works under the hood.

The Scraping Process Step-by-Step

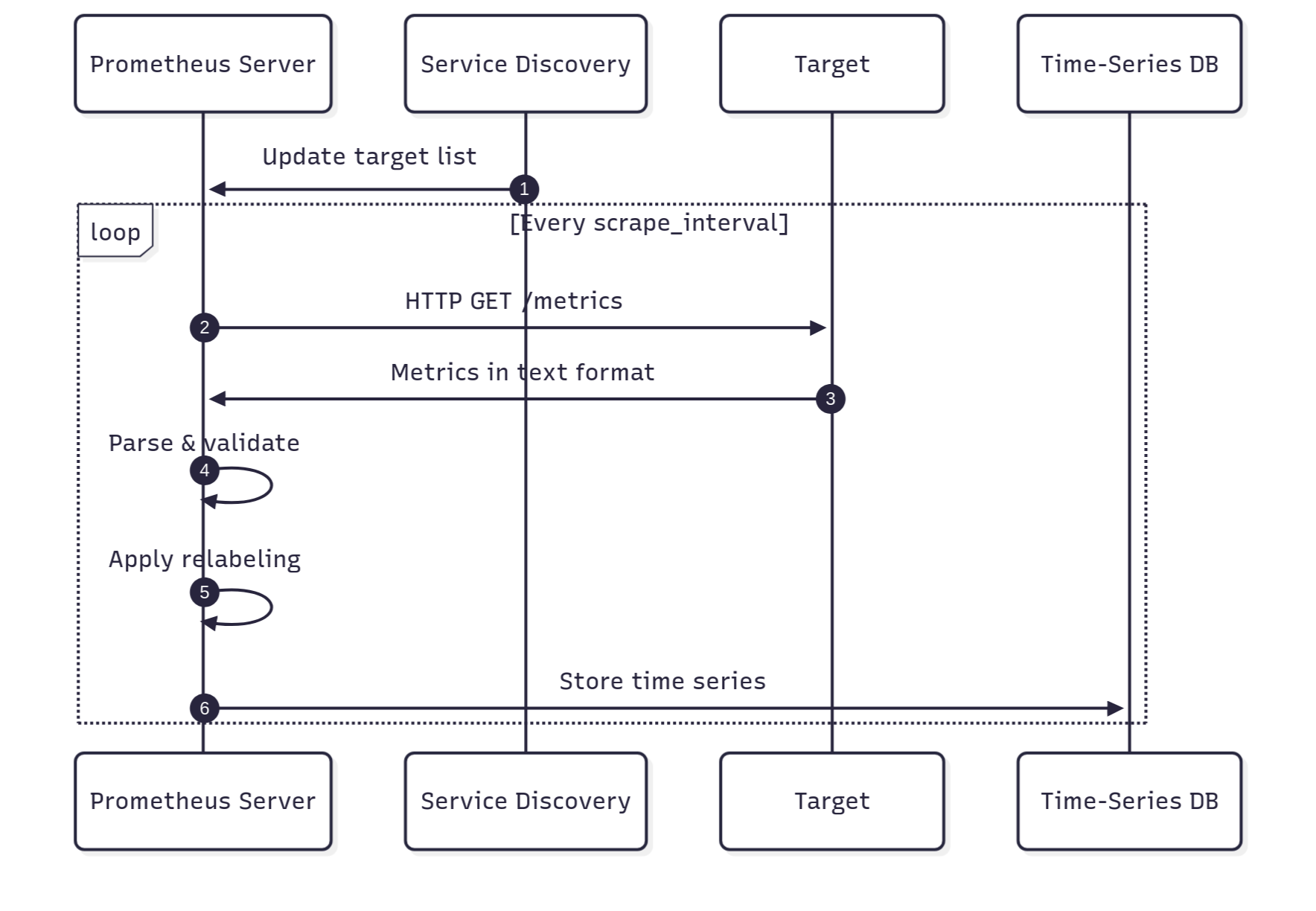

Prometheus scraping follows a simple loop. Here's what happens every time the scrape interval hits:

- Target Discovery: Prometheus figures out what to scrape. You can list targets manually in the config (static), or let service discovery find them automatically. In Kubernetes, where pods come and go constantly, service discovery saves you from updating configs every five minutes.

- Scrape Request: At each configured scrape_interval, Prometheus sends an HTTP GET request to each target's metrics endpoint.

- Metrics Collection: The target responds with metrics in Prometheus text format, which is a simple, human-readable format with metric names, labels, and values. The text format lets you debug by curling endpoints directly without specialized tools.

- Parsing and Validation: Prometheus parses the response, rejecting the scrape if the format is invalid, and then applies any configured metric relabeling rules.

- Storage: Valid metrics get written to Prometheus' time-series database with timestamps, becoming available for PromQL queries. Data is persisted in 2-hour blocks that are compacted into larger blocks over time for efficient storage.

Metrics Exposition Format

Targets expose metrics in a standardized text format. Each metric has a name, optional labels for filtering, and a numeric value. Comments provide metadata about metric types (e.g., counter, gauge, histogram, or summary). Here's what it looks like:
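A representative sample of what a target might return is sketched below. The metric names, labels, and values are illustrative assumptions, not output from a real service:

```text
# HELP http_requests_total Total number of HTTP requests handled.
# TYPE http_requests_total counter
http_requests_total{method="GET",status="200"} 1027
http_requests_total{method="POST",status="500"} 3
# HELP process_resident_memory_bytes Resident memory size in bytes.
# TYPE process_resident_memory_bytes gauge
process_resident_memory_bytes 2.4576e+07
```

Each # HELP line describes a metric, each # TYPE line declares its type, and every remaining line is one sample: a metric name, an optional label set in braces, and a value.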

This is the Prometheus text exposition format, which the OpenMetrics specification builds on to standardize how applications expose metrics. That # TYPE line? It tells Prometheus whether this metric is a counter (only goes up), a gauge (goes up and down), a histogram (distribution of values), or a summary. This matters because different types need different PromQL queries.

The Role of the Prometheus Server

The Prometheus server orchestrates everything. It maintains the target list, schedules scrape jobs based on configured intervals, manages concurrent scraping across thousands of endpoints, and handles failures gracefully. When a target doesn't respond within scrape_timeout, Prometheus marks it as down and continues scraping other targets.

The server implements intelligent scrape scheduling that distributes requests evenly across the interval. With 1,000 targets and a 15-second interval, Prometheus staggers scrapes to avoid hitting all targets simultaneously. Otherwise, you'd create load spikes every 15 seconds.

Key Characteristics of Prometheus Scraping

- Pull-Based Architecture: Prometheus initiates all metrics collection, giving complete control over scraping frequency and target health detection.

- HTTP-Based Protocol: Scraping uses standard HTTP GET requests, making it simple to implement and debug with tools like curl.

- Time-Series Database (TSDB): Scraped data is stored in an on-disk TSDB optimized for time-series workloads, averaging roughly 1-2 bytes per sample after compression.

- Service Discovery Integration: Automatically discovers scrape targets through Kubernetes, Consul, EC2, and Azure, so you don't have to list targets by hand.

- Multi-Dimensional Data Model: Labels attached to metrics enable powerful filtering and aggregation without requiring separate metric names.

- Efficient Storage: The TSDB uses delta-of-delta encoding for timestamps and XOR compression for values to minimize storage requirements.

- Flexible Query Language: Scraped metrics become available for querying via PromQL for alerting and visualization.

Common Challenges in Prometheus Scraping

Prometheus scraping works great until it doesn't. Production throws challenges at you that compound fast. High cardinality silently consumes memory until your server OOMs. Dynamic targets disappear, and you lose visibility. Network latency causes scrape timeouts, which look like target failures and trigger false alerts.

Most of these issues stem from configuration. Get your scrape settings right from the start, and you'll avoid firefighting later. Otherwise, by the time memory usage spikes due to cardinality, there is already fire on the mountain.

Optimizing Prometheus Scraping Configuration

Proper configuration is crucial for reliable, efficient scraping. The prometheus.yml configuration file controls all aspects of metrics collection.

Understanding the Configuration File Structure

The Prometheus configuration uses YAML syntax:
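A minimal sketch of a prometheus.yml is shown here. The job names, target addresses, label values, and rule file path are hypothetical placeholders; the top-level keys are standard Prometheus configuration fields:

```yaml
# prometheus.yml (minimal sketch; names and addresses are placeholders)
global:
  scrape_interval: 30s        # default for every job unless overridden
  scrape_timeout: 25s         # must not exceed scrape_interval
  evaluation_interval: 30s    # how often rules are evaluated
  external_labels:
    cluster: example-cluster  # hypothetical label added when talking to external systems

rule_files:
  - "rules/*.yml"             # hypothetical location of recording and alerting rules

scrape_configs:
  - job_name: prometheus      # Prometheus scraping its own /metrics endpoint
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: example-app     # hypothetical application job
    scrape_interval: 15s      # overrides the global default for this job only
    scrape_timeout: 12s       # overridden too, so it stays below the job's interval
    metrics_path: /metrics    # the default path, shown for clarity
    static_configs:
      - targets: ["app-1.example.internal:8080"]
```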

The global section sets defaults that apply unless overridden. Each scrape_configs entry defines a job with targets sharing the same configuration.

Setting Optimal Scrape Intervals

The scrape_interval controls how often Prometheus hits each target. Default is 60 seconds, but most production setups run faster:

- 15-30 seconds: Standard for most workloads, providing good resolution while keeping overhead manageable

- 5-10 seconds: High-frequency monitoring for critical services

- 60+ seconds: Long-term trends or stable infrastructure

Don't just copy-paste defaults. Think about what you're monitoring. Match intervals to how metrics actually change. Web apps serving requests? 15-30 seconds catches issues without overwhelming targets. Batch jobs running hourly? Don't scrape every 5 seconds, otherwise you'll generate 720 data points per hour for a metric that changes at most once in that time. Pure waste.

Configuring Scrape Timeouts

scrape_timeout must not exceed scrape_interval, and in practice it should be shorter so that a slow scrape finishes before the next one is due:
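As a sketch, the pairing might look like this. The job name, target address, and specific durations are assumptions chosen to land at about 83% of each interval:

```yaml
global:
  scrape_interval: 30s
  scrape_timeout: 25s         # about 83% of the 30s interval

scrape_configs:
  - job_name: slow-exporter   # hypothetical job with an expensive /metrics endpoint
    scrape_interval: 60s      # per-job override of the global interval
    scrape_timeout: 50s       # about 83% of the 60s interval
    static_configs:
      - targets: ["exporter.example.internal:9100"]
```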

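Prometheus rejects a configuration in which scrape_timeout is greater than scrape_interval. A timeout equal to the interval is accepted, but it leaves no headroom: a slow target can consume the whole cycle, which shows up as failed scrapes and data gaps. Keep timeouts at 80-90% of the interval to allow completion before the next cycle.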

Using Relabeling for Efficiency

Relabeling transforms or filters targets and metrics:
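The sketch below shows both kinds of relabeling on one job. The job name, target addresses, the go_gc_ metric prefix, and the request_id label are assumptions picked for illustration:

```yaml
scrape_configs:
  - job_name: api             # hypothetical job
    static_configs:
      - targets:
          - "api-1.example.internal:8080"
          - "api-canary.example.internal:8080"

    # Applied to targets BEFORE scraping.
    relabel_configs:
      # Drop any target whose address starts with "api-canary."
      - source_labels: [__address__]
        regex: 'api-canary\..*'
        action: drop

    # Applied to scraped samples AFTER collection, BEFORE storage.
    metric_relabel_configs:
      # Drop whole metrics by name.
      - source_labels: [__name__]
        regex: 'go_gc_.*'
        action: drop
      # Remove a high-cardinality label from every remaining series.
      - regex: 'request_id'
        action: labeldrop
```

Relabeling regexes are fully anchored, so the pattern has to match the entire value. Be careful with labeldrop: if the removed label was the only thing distinguishing two series, they collide after relabeling.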

Relabeling happens in two places: relabel_configs filters targets before scraping, and metric_relabel_configs filters metrics after collection but before storage. Use metric relabeling to drop high-cardinality labels before they hit your Time Series Database (TSDB).

Scraping Metrics from Different Sources

Prometheus supports multiple mechanisms for discovering and scraping targets.

Static Targets Configuration

Static configuration defines targets explicitly:
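A minimal sketch, assuming two fixed hosts running an exporter on port 9100. The hostnames and label values are placeholders:

```yaml
scrape_configs:
  - job_name: node            # hypothetical job name
    static_configs:
      - targets:
          - "10.0.1.10:9100"
          - "10.0.1.11:9100"
        labels:
          datacenter: dc1       # attached to every series from these targets
          environment: staging
          team: platform
```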

Static configs work well for on-premise infrastructure or development environments where instances have fixed IP addresses. The labels you add here persist throughout the metric's lifecycle, making them useful for identifying the datacenter, environment, or team responsible for these targets.

Kubernetes Service Discovery

Kubernetes SD automatically finds pods and services:
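One common way to write annotation-based opt-in scraping is sketched here. The job name is hypothetical, and the prometheus.io/* annotations are a widely used convention rather than something Prometheus itself defines:

```yaml
scrape_configs:
  - job_name: kubernetes-pods   # hypothetical job name
    kubernetes_sd_configs:
      - role: pod               # discover every pod the server is allowed to list
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true".
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        regex: "true"
        action: keep
      # If the pod sets prometheus.io/path, use it as the metrics path.
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        regex: (.+)
        target_label: __metrics_path__
        action: replace
      # Copy namespace and pod name into permanent labels.
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```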

The power of Kubernetes service discovery lies in the rich metadata it exposes. You can filter pods by namespace, label, annotation, or even container port. This example shows annotation-based opt-in scraping, a common pattern where pods add prometheus.io/scrape: "true" to enable monitoring.

Cloud Provider Service Discovery

Cloud SD finds instances based on platform APIs:
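A sketch for EC2, assuming instances carry an Environment tag and run an exporter on port 9100. The region, tag key, tag value, and port are assumptions:

```yaml
scrape_configs:
  - job_name: ec2-production    # hypothetical job name
    ec2_sd_configs:
      - region: us-east-1       # assumed region
        port: 9100              # port to scrape on each discovered instance
        refresh_interval: 60s   # how often the EC2 API is polled
        filters:
          # Only instances tagged Environment=production ...
          - name: "tag:Environment"
            values: ["production"]
          # ... that are currently running.
          - name: instance-state-name
            values: ["running"]
    relabel_configs:
      # Expose the instance's Name tag as a regular label.
      - source_labels: [__meta_ec2_tag_Name]
        target_label: instance_name
```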

Cloud provider service discovery polls your cloud platform's API at regular intervals to discover instances. The filters prevent Prometheus from attempting to scrape every instance in your account. In this example, only production EC2 instances that are currently running are targeted.

File-Based Service Discovery

File-based SD reads target lists from JSON or YAML:
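The sketch below pairs a scrape job with one target file. The file paths, hostnames, and labels are hypothetical:

```yaml
# File: prometheus.yml (fragment)
scrape_configs:
  - job_name: file-discovered       # hypothetical job name
    file_sd_configs:
      - files:
          - "targets/*.yml"         # glob is allowed in the last path segment
        refresh_interval: 5m        # periodic re-read, in addition to file watching
---
# File: targets/web.yml (hypothetical file, typically generated by a script)
- targets:
    - "web-1.example.internal:8080"
    - "web-2.example.internal:8080"
  labels:
    service: web
```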

File-based service discovery is useful when you're generating target lists with external tools or scripts. Prometheus watches these files and reloads targets automatically when they change.

Custom Exporters

Instrument custom applications with Prometheus client libraries.

Custom exporters let you expose application-specific metrics. The Prometheus client libraries (Go, Python, Java, etc.) handle the text format for you, and you just increment counters and set gauges.

Advanced Prometheus Scraping Techniques

- Metric Relabeling and Filtering: Use labelmap to copy Kubernetes metadata to permanent labels. Use hashmod for consistent hashing when sharding. Filter scraped samples with metric_relabel_configs. Dropped metrics never enter storage, which saves memory and disk.

- Authentication Methods: Secure endpoints using basic auth, bearer tokens, or TLS with client certificates for mutual authentication.

- Using Exemplars: Exemplars link metrics to trace IDs. When you see a spike, jump straight to relevant traces in Jaeger or Tempo.

- Horizontal Sharding: Use hashmod relabeling to consistently assign targets to specific Prometheus instances when a single server can't handle the load.

- Remote Write: Send scraped metrics to compatible backends like Thanos or Cortex for long-term storage beyond Prometheus's retention period.

- Recording Rules: Pre-compute expensive queries and store results as new time series, particularly valuable for dashboard queries aggregating across many series.
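Several of the techniques above can be combined in a single configuration. The sketch below shows authentication, hashmod sharding, and remote write together. Every hostname, file path, and URL is a placeholder, and the remote write path depends on the backend you use:

```yaml
scrape_configs:
  - job_name: secure-app              # hypothetical job
    scheme: https
    tls_config:
      ca_file: /etc/prometheus/ca.crt
      cert_file: /etc/prometheus/client.crt   # client certificate for mutual TLS
      key_file: /etc/prometheus/client.key
    authorization:
      type: Bearer
      credentials_file: /etc/prometheus/token # bearer token read from a file
    static_configs:
      - targets:
          - "app-1.example.internal:8443"
          - "app-2.example.internal:8443"
    relabel_configs:
      # Hash each target address into one of 2 buckets ...
      - source_labels: [__address__]
        modulus: 2
        target_label: __tmp_shard
        action: hashmod
      # ... and keep only bucket 0 on this server (the other server keeps "1").
      - source_labels: [__tmp_shard]
        regex: "0"
        action: keep

remote_write:
  - url: "https://metrics-store.example.internal/api/v1/receive"  # placeholder URL
```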

Prometheus Scraping Errors and Troubleshooting

- Context Deadline Exceeded: Scrape timeout error.

Solution: Increase scrape_timeout, optimize target metric generation, or check network connectivity.

- Connection Refused: Can't establish TCP connection. Target isn't running, wrong port, or network policies block access.

Solution: Verify the target is running (curl http://target:port/metrics), check firewalls (nc -zv target port), and ensure the application binds to 0.0.0.0, not 127.0.0.1.

- 401 Unauthorized / 403 Forbidden: Authentication failure.

Solution: Verify credentials, check certificate expiration, and review basic_auth or bearer_token_file configuration.

- Malformed Metrics Response: Non-conforming data format.

Solution: Curl the endpoint manually and validate the output against the Prometheus text format specification.

- High Memory Usage: Excessive consumption due to high-cardinality metrics.

Solution: Use metric_relabel_configs to drop problematic labels, implement recording rules.

- Scrape Target Down: Target marked as down (up = 0).

Solution: Check target health separately, review application logs, and verify network connectivity.

- Too Many Samples: Sample limit exceeded error.

Solution: Investigate excessive metric generation, drop unneeded series with metric_relabel_configs, or raise sample_limit in the scrape config if the volume is legitimate.
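For reference, a per-job sample limit could be configured as in the sketch below. The job name, target, limit value, and the debug_ metric prefix are assumptions:

```yaml
scrape_configs:
  - job_name: noisy-app         # hypothetical job
    # If more samples than this remain after metric relabeling,
    # the entire scrape is treated as failed.
    sample_limit: 10000
    static_configs:
      - targets: ["noisy-app.example.internal:8080"]
    metric_relabel_configs:
      # Drop series you don't need so the scrape stays under the limit.
      - source_labels: [__name__]
        regex: 'debug_.*'
        action: drop
```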

Best Practices for Secure and Reliable Prometheus Scraping

Implementing Prometheus scraping effectively requires following established patterns that balance performance, security, and operational simplicity.

These practices work together to create a resilient monitoring infrastructure. Service discovery updates your targets automatically while metric relabeling controls cardinality as new services appear. Authentication secures endpoints, and TLS protects data in transit. Treat this as a system, not a checklist.

Simplifying Prometheus Scraping with groundcover

Managing Prometheus scraping at scale gets tedious fast. You're updating scrape configs constantly as services deploy. You're fighting cardinality explosions from a single misconfigured label. You're troubleshooting why targets intermittently time out. When you're running thousands of targets across multiple clusters, the configuration overhead becomes operationally painful.

In addition to supporting scraping of custom metrics, groundcover takes a different approach. Instead of HTTP-based scraping, it uses eBPF to capture metrics directly from the kernel. This means automatic service discovery: new pods get monitored the moment they start, without touching your configs. It also means zero scrape overhead since there's no HTTP request/response cycle.

The eBPF approach captures metrics with minimal performance impact, enabling higher-frequency collection than traditional scraping allows. It deploys seamlessly using Helm charts, automatically integrating with Kubernetes RBAC and network policies.

The real win? groundcover correlates metrics with traces and logs automatically. When you spot a latency spike in a metric, you can jump straight to the relevant traces in seconds, not minutes of manual correlation. This unified observability happens without integrating multiple separate tools or maintaining complex pipelines.

It's particularly valuable in large Kubernetes environments, where traditional Prometheus scraping requires constant configuration maintenance. It also remains fully compatible with Prometheus and PromQL, so your existing dashboards and alerts keep working.

Conclusion

Prometheus scraping provides the foundation for reliable monitoring in cloud-native environments. Knowing how scraping works, configuring optimal intervals and relabeling rules, implementing service discovery, and following security best practices allows you to build a monitoring infrastructure that scales with your applications. The challenges of high cardinality, dynamic targets, and distributed architectures are manageable with proper planning and the right tools.

FAQs

How can I reduce scrape load and improve Prometheus performance at scale?

You can reduce load by optimizing both collection frequency and data volume.

- Adjust Intervals: Increase the scrape_interval for metrics that don't change often (e.g., infrastructure metrics every 60s instead of 15s).

- Drop Metrics: Use metric relabeling (metric_relabel_configs) to drop high-cardinality labels or unused metrics before they hit the Time Series Database (TSDB).

- Scale Horizontally: Shard targets across multiple Prometheus servers so that each scrapes a different subset, use federation to aggregate selected series across them, or use remote write to offload long-term storage.

- Monitor Health: Use queries like rate(prometheus_tsdb_head_samples_appended_total[5m]) (ingestion rate) and prometheus_tsdb_head_series (current series count) to track resource usage.

What's the difference between Prometheus federation and scraping, and when should each be used?

Scraping and federation serve different scaling purposes within a Prometheus environment.

- Scraping: This is the primary collection mechanism where the server pulls metrics directly from application endpoints (/metrics). Use case: direct monitoring of applications and infrastructure health.

- Federation: This is a hierarchical architecture where one Prometheus server scrapes selected time series from another Prometheus server's /federate endpoint. Use case: aggregating metrics across multiple regional clusters or creating a global view without overwhelming a single instance.

How does groundcover enhance Prometheus scraping in cloud-native environments?

groundcover replaces the standard HTTP pull mechanism with a kernel-based approach.

- Technology: groundcover uses eBPF technology to capture metrics directly from the Linux kernel, bypassing traditional HTTP-based scraping.

- Configuration: This eliminates configuration overhead through automatic service discovery of new pods and containers.

- Performance: It dramatically reduces performance impact since there is no HTTP request overhead, allowing for higher-frequency data collection.

- Observability: groundcover correlates metrics with distributed traces and logs automatically, providing unified observability without integrating multiple separate tools.
